ChatGPT on Your Data

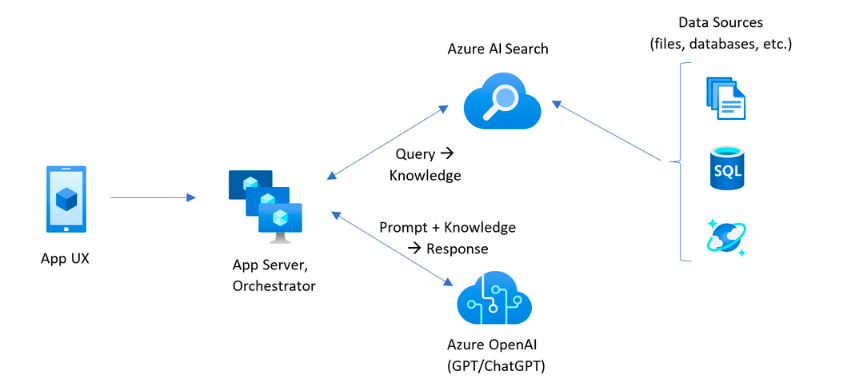

"ChatGPT on Your Data" is a specialized variant of the ChatGPT model, designed

to be tailored for specific users or organizations by incorporating their private data into the AI's knowledge base by using Retrieval-Augmented Generation.

What is Retrieval-Augmented Generation (RAG)?

Retrieval-Augmented Generation (RAG) is a natural language processing technique with two major components:

Retrieval: The system retrieves relevant documents or information from a large dataset. This step is crucial for finding the most pertinent information related to the query or context. It's like searching through a vast library to find the books or pages that have the answers.

Generation: After retrieving the relevant information, a language generation model (like GPT) uses this information to generate a response. This step is where the model synthesizes the retrieved data into a coherent and contextually appropriate answer or content.

The RAG approach combines the strengths of both retrieval and generation models. The retrieval part ensures that the responses are grounded in specific, detailed information, improving the relevance and accuracy of the answers. The generation part, on the other hand, allows the system to provide answers in a natural, conversational manner.

This technique is particularly useful for applications where the AI needs to provide detailed, accurate information that might not be contained in its initial training data. It expands the AI's knowledge base in real time, at the moment a question is asked, to include more detailed or recent information.
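The two components described above can be illustrated with a minimal sketch. It is not a production implementation: retrieval here uses simple bag-of-words cosine similarity instead of the embedding search a real system would typically use, and `generate` is a hypothetical stand-in for a call to a language model. All function names and sample documents are assumptions made for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into alphanumeric word tokens."""
    return re.findall(r"[a-z0-9]+", text.lower())


def cosine_similarity(a, b):
    """Cosine similarity between two bag-of-words Counters."""
    dot = sum(count * b[token] for token, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query, documents, top_k=2):
    """Retrieval step: return up to top_k documents that share words with the query."""
    query_vector = Counter(tokenize(query))
    scored = [
        (cosine_similarity(query_vector, Counter(tokenize(doc))), doc)
        for doc in documents
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for score, doc in scored[:top_k] if score > 0]


def build_prompt(query, passages):
    """Combine the retrieved passages and the question into one prompt."""
    context = "\n".join(f"- {p}" for p in passages)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {query}"
    )


def answer(query, documents, generate):
    """Generation step: pass the grounded prompt to a model callable."""
    passages = retrieve(query, documents)
    return generate(build_prompt(query, passages))


# Illustrative data and a placeholder "model" (both hypothetical).
documents = [
    "Refunds are processed within 14 days of the return request.",
    "The office is closed on public holidays.",
    "Support is available by email and chat.",
]


def fake_generate(prompt):
    # A real system would send the prompt to a language model here.
    return f"[model output for a prompt of {len(prompt)} characters]"


print(answer("How long do refunds take?", documents, fake_generate))
```

Keeping `generate` as a parameter means the retrieval logic can be tested on its own, and the model behind it can be swapped without touching the rest of the pipeline.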

Building Knowledge Base

Each of the following data sources can significantly enrich the knowledge base of a RAG system, enabling it to deliver more accurate, contextually relevant, and useful responses tailored to the specific needs of the user or organization.

Files in PDF, Microsoft Word, Markdown, and other formats. Reports, manuals, research papers, and memos stored in common file formats such as PDF, Word, or Google Docs. They provide a rich text-based information source for the RAG system.

Wikis in SharePoint, Zendesk, Atlassian Confluence, etc. Enterprise content management systems housing articles, wikis, project plans, company policies, and collaborative workspaces. These platforms offer a mix of structured and unstructured data useful for RAG systems.

Structured and unstructured data in private databases. Includes databases with structured data like customer records, transactions, and inventories, as well as unstructured data like emails and support tickets. This varied data is crucial for detailed, specific information retrieval.

Internal communication channels. Data from internal communication tools like Slack or Microsoft Teams, providing insights into organizational knowledge, ongoing projects, and common queries within a team.

Company websites. Information from official company websites and intranets, including news updates, product descriptions, FAQs, and internal announcements, offering a source of curated and updated information.

Scientific and technical databases. Specialized databases containing research papers, patents, and technical specifications, particularly valuable for research-intensive fields and organizations.

CRM systems. Data from CRMs, encompassing customer profiles, interaction histories, and sales data, which can be leveraged for personalized responses based on customer history.

Financial records and reports. Financial data such as budgets, expenditure reports, and financial statements, essential for organizations that need to answer finance-related questions.

Regulatory and compliance documents. Documentation related to industry regulations, compliance guidelines, and legal requirements, key for RAG systems in regulated industries.

Training materials and educational content. Instructional content and training materials used within an organization, useful for RAG systems to provide instructional or explanatory responses.
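Whatever the source, content usually has to be brought into one common shape before it can be retrieved. The sketch below shows one possible way to do that, assuming the text has already been extracted from the file, wiki, or database: each document is split into overlapping word-based chunks that keep a reference to where they came from. The `Chunk` structure, the source labels, and the chunk sizes are illustrative assumptions, not a description of a specific product.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    source: str    # hypothetical label such as "pdf", "confluence", or "crm"
    doc_id: str    # identifier of the original document or record
    position: int  # index of this chunk within the document
    text: str


def split_into_chunks(source, doc_id, text, max_words=50, overlap=10):
    """Split text into chunks of up to max_words words, repeating
    `overlap` words between neighbouring chunks to preserve context."""
    if max_words <= 0 or overlap < 0 or overlap >= max_words:
        raise ValueError("require max_words > 0 and 0 <= overlap < max_words")

    words = text.split()
    step = max_words - overlap
    chunks = []
    for position, start in enumerate(range(0, len(words), step)):
        piece = words[start:start + max_words]
        chunks.append(Chunk(source, doc_id, position, " ".join(piece)))
        # Stop once this chunk already reaches the end of the document.
        if start + max_words >= len(words):
            break
    return chunks


# Example: a made-up 120-word document from a hypothetical wiki page.
sample_text = " ".join(f"word{i}" for i in range(120))
for chunk in split_into_chunks("confluence", "page-1", sample_text):
    print(chunk.source, chunk.doc_id, chunk.position, len(chunk.text.split()))
```

Storing the source and document identifier with every chunk makes it possible to show users where an answer came from, and to remove or refresh content when the original document changes.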

Looking for more details? Check out our Crafting Technical Specifications for Custom ChatGPT Implementations blog post.

How We Work

At our organization, we employ a dual-approach strategy to cater to diverse client needs and project complexities:

Code approach. For complex systems demanding high-quality solutions, we utilize a code-based approach built on top of Azure services.

This method allows for greater customization, scalability, and precision, making it ideal for clients with specific, intricate requirements.

The Azure platform provides robust infrastructure and security, ensuring reliable and secure operations.

Low/No code approach. For clients seeking quicker deployment and ease of use, we offer a low/no code approach. This method is integrated with platforms like Microsoft Copilot Studio, OpenAI GPTs, and the OpenAI Assistants API.

While this approach is quicker to get started with and more user-friendly, it may offer slightly less customization and depth than the code-based approach.

It's perfect for clients who need a more straightforward, cost-effective solution.

We pay special attention to model selection, as it significantly influences the overall cost and efficiency of the project.

We make informed decisions based on detailed analysis, and for further insights, we refer our clients to our Calculating OpenAI and Azure OpenAI Service Model Usage Costs blog post, which elaborates on calculating model consumption.

This ensures transparency and helps in selecting the most cost-effective model for your needs.
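To give a rough idea of how model choice affects cost, the sketch below estimates monthly spend from token volume, assuming per-token pricing with separate rates for input and output. The model names and prices are placeholders invented for illustration; actual rates must be taken from the provider's current price list.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelPricing:
    name: str
    input_per_1k: float   # price per 1,000 input (prompt) tokens
    output_per_1k: float  # price per 1,000 output (completion) tokens


def monthly_cost(pricing, requests_per_month, input_tokens, output_tokens):
    """Estimate monthly cost from the average token counts per request."""
    per_request = (
        (input_tokens / 1000) * pricing.input_per_1k
        + (output_tokens / 1000) * pricing.output_per_1k
    )
    return per_request * requests_per_month


def cheapest(models, requests_per_month, input_tokens, output_tokens):
    """Return the model with the lowest estimated monthly cost."""
    return min(
        models,
        key=lambda m: monthly_cost(m, requests_per_month, input_tokens, output_tokens),
    )


# Placeholder models and prices (hypothetical, not real price points).
models = [
    ModelPricing("model-small", input_per_1k=0.5, output_per_1k=1.5),
    ModelPricing("model-large", input_per_1k=10.0, output_per_1k=30.0),
]

for model in models:
    estimate = monthly_cost(model, requests_per_month=10_000,
                            input_tokens=2_000, output_tokens=500)
    print(f"{model.name}: {estimate:.2f} per month")
```

In a RAG system the retrieved passages are sent along with every question, so input tokens tend to dominate the bill. Limiting the number and length of retrieved passages is often as important as the choice of model.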

To enhance the capabilities of Large Language Models (LLMs), we incorporate advanced tools like LangChain and Semantic Kernel.

For a comprehensive overview of our technological capabilities, we invite you to visit our Technologies page.

By combining these methodologies and tools, we ensure that our solutions are not only tailored to our clients' specific needs but also remain at the forefront of technological innovation and efficiency.